Build AI Prompts That Feel Human: A 2025 Guide

Tired of AI outputs that scream 'robot'? You're not alone. As AI becomes woven into content creation, marketing, and product development, the gap between machine-generated text and genuinely human-sounding responses has become impossible to ignore. The problem isn't that AI can't write; it's that most prompts are built like instruction manuals rather than conversations. In 2025, human-like AI content isn't a luxury anymore; it's a competitive advantage. When your audience can't tell whether a person or an AI wrote what they're reading, trust tends to increase, engagement rises, and your content has a better chance of performing well across platforms. This guide walks you through techniques that AI researchers and product teams use in practice. You'll learn how to layer context, craft constraints that feel natural, and test your prompts until they produce outputs that pass the human authenticity test. Whether you're building chatbots, writing blog posts, or creating marketing copy, these methods apply.

Why Human-Like AI Matters in 2025 Content

Here's the uncomfortable truth: generic AI outputs are everywhere, and your audience can smell them from a mile away. When an AI response feels robotic, it signals low effort, a lack of expertise, and, worst of all, that you don't respect your reader's time. Human-like AI content does the opposite. It builds credibility, keeps readers engaged longer, and actually converts. The shift toward authentic ChatGPT prompts reflects a broader market reality: content that sounds like it was written by a real person with a genuine perspective tends to outperform obviously machine-generated text on engagement metrics. For developers and founders, this matters because it directly affects user retention, brand perception, and monetization potential. When your AI-powered features feel natural rather than mechanical, users stick around. They recommend your product. They trust your brand. The technical challenge isn't making AI write; it's making AI write like someone who actually cares about the topic and the reader. That's where prompt optimization comes in. A well-engineered prompt doesn't just generate text; it generates text that sounds like it came from someone with expertise, personality, and a point of view. This is the difference between an AI tool and an AI advantage.

The Trust Factor in AI-Generated Content

Trust is fragile. One robotic sentence can undermine an entire piece of content: when readers detect AI, they lower their expectations and engagement drops. But here's what's interesting: when a prompt is engineered well, many readers struggle to tell human and AI writing apart. The key is layered context, giving your AI model enough background, tone, and perspective that it naturally produces authentic-sounding output. This isn't manipulation; it's communication. You're essentially telling the AI: 'Here's who I am, here's who I'm talking to, here's what matters.' The result feels less like a machine response and more like a colleague explaining something they understand deeply.

Real-World Impact for Devs and Founders

Consider a SaaS product with AI-powered customer support. If responses sound generic and robotic, churn increases. If they sound like they're from a real support engineer who understands the customer's problem, satisfaction scores jump. The same principle applies to blog content, email marketing, and in-app messaging. Founders who apply these AI writing techniques well can see measurable improvements in conversion rates, customer lifetime value, and brand loyalty. For developers, this means building features that users actually want to use because they feel natural and helpful, not mechanical and frustrating.

Core Principles of Authentic Prompt Engineering

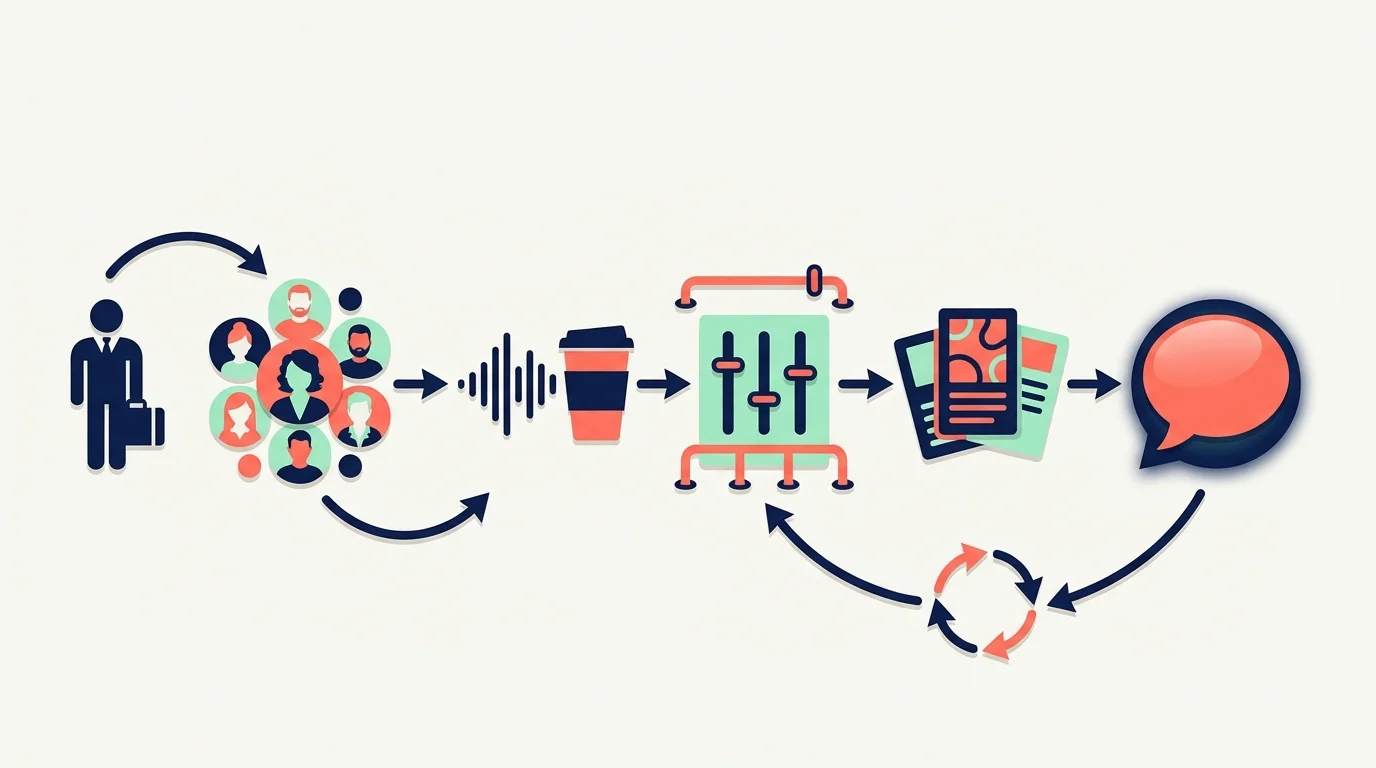

Building prompts that generate human-like content isn't magic; it's a system. The best AI prompt engineering follows a few core principles that separate mediocre outputs from exceptional ones. First, understand that AI models are pattern-matching machines. They don't think; they predict what comes next based on patterns in their training data. Your job is to guide those patterns toward authenticity. This means being specific about context, tone, and constraints. Vague prompts produce vague outputs. Detailed prompts produce detailed, nuanced responses. The research backs this up: prompts with clear context and specific examples consistently outperform generic instructions [1]. Second, recognize that human-like AI content requires you to think like a writer, not a programmer. You're not debugging code; you're coaching an AI to adopt a voice and perspective. This means including personality cues, perspective markers, and authentic constraints that shape how the AI approaches the task. Third, frame constraints positively. How you phrase a constraint matters as much as what it says. Constraints that only define what the output should avoid tend to be less effective than constraints that define what it should include [3]. Instead of 'don't sound robotic,' try 'sound like you're explaining this to a colleague over coffee.' The difference is subtle but powerful.

Specificity as Your Foundation

Vague prompts are the enemy of authenticity. When you tell an AI to 'write something engaging,' you get generic content. When you tell it to 'write like you're a former startup founder explaining why most businesses fail in year two, using specific examples from companies you've worked with,' you get something real. Detailed context transforms outputs. Include the subject matter, the scope, relevant constraints, and the perspective you want. Be specific about format too—bullet points, narrative paragraphs, conversational tone, technical depth. The more specific you are, the more the AI can pattern-match toward authenticity rather than generic templates [1].
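To make that checklist concrete, here is a minimal Python sketch, assuming you capture a prompt's specifics in a small structured object before turning it into text. `PromptSpec`, `missing_fields`, and `render` are hypothetical names for illustration, not part of any library; the fields simply mirror the elements listed above (subject matter, scope, constraints, perspective, format).

```python
from dataclasses import dataclass, fields


@dataclass
class PromptSpec:
    # Hypothetical container: one field per element a specific prompt should pin down.
    subject: str = ""
    scope: str = ""
    constraints: str = ""
    perspective: str = ""
    output_format: str = ""


def missing_fields(spec: PromptSpec) -> list[str]:
    """Return the names of blank fields, i.e. the places where the prompt is still vague."""
    return [f.name for f in fields(spec) if not getattr(spec, f.name).strip()]


def render(spec: PromptSpec) -> str:
    """Join the filled-in fields into a prompt, one labeled line per field."""
    lines = []
    for f in fields(spec):
        value = getattr(spec, f.name).strip()
        if value:
            label = f.name.replace("_", " ").capitalize()
            lines.append(f"{label}: {value}")
    return "\n".join(lines)


vague = PromptSpec(subject="something engaging")
specific = PromptSpec(
    subject="why most businesses fail in year two",
    scope="early-stage startups",
    constraints="use specific examples from companies you've worked with",
    perspective="a former startup founder",
    output_format="conversational narrative paragraphs",
)

print(missing_fields(vague))     # ['scope', 'constraints', 'perspective', 'output_format']
print(missing_fields(specific))  # []
print(render(specific))
```

A non-empty `missing_fields` result is simply a cue to add detail before you send the prompt.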

Tone and Voice as Structural Elements

Tone isn't decoration; it's structure. When you define how something should sound—formal, conversational, skeptical, enthusiastic—you're actually constraining the AI's output in ways that produce authenticity. Think of tone as the invisible hand guiding word choice, sentence length, and perspective. A prompt that specifies 'sound skeptical about this trend' will naturally produce different outputs than 'explain this trend.' The AI adjusts vocabulary, sentence structure, and emphasis to match the tone. This is why prompt optimization works: you're not just asking for content; you're architecting how that content should feel.

Step-by-Step Prompt Building Techniques

Building an authentic prompt is a process. You're not writing a single instruction; you're constructing a framework that guides the AI toward human-like output. Here's how the best prompt engineers do it. Start with role definition. Tell the AI who it is: 'You are a product manager at a Series B startup who's shipped three products and failed at two.' This single sentence shapes everything that follows. The AI now has a perspective, an experience level, and implicit constraints. It won't sound like a generic AI; it will sound like someone with actual skin in the game. Next, add context about the audience. Who are you writing for? What do they know? What do they care about? 'You're explaining this to developers who've been burned by overhyped tools before and are skeptical of anything that sounds too good to be true.' This context prevents generic cheerleading and pushes toward authentic skepticism. Then, layer in the specific task with examples. Don't just ask for output; show what good output looks like. Include 2-3 examples of the tone, structure, and depth you want. This is called few-shot prompting, and research shows it can substantially improve output quality [1]. Finally, add constraints that feel natural. Instead of 'don't be too technical,' try 'explain this in a way that a smart person without a technical background could understand in two minutes.' Specific, achievable constraints produce better results than vague restrictions [3].
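The four steps above can be sketched as a single function that stacks the layers in order. This is a minimal illustration, not a standard API: `build_prompt` is a hypothetical helper, the task and the two example snippets are placeholders, and the role, audience, and constraint text are lifted from the paragraph above.

```python
def build_prompt(role: str, audience: str, task: str,
                 examples: list[str], constraints: list[str]) -> str:
    """Assemble role, audience, task + examples, and constraints, in that order."""
    parts = [
        role,                     # step 1: who the model is
        f"Audience: {audience}",  # step 2: who it is talking to
        f"Task: {task}",          # step 3: what to produce...
    ]
    # ...shown through 2-3 examples of the desired tone, structure, and depth (few-shot)
    for i, example in enumerate(examples, start=1):
        parts.append(f"Example {i}:\n{example}")
    # step 4: constraints phrased as concrete, achievable targets
    if constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    return "\n\n".join(parts)


prompt = build_prompt(
    role=("You are a product manager at a Series B startup who's shipped "
          "three products and failed at two."),
    audience=("Developers who've been burned by overhyped tools before and are "
              "skeptical of anything that sounds too good to be true."),
    task="Explain when a team should adopt a new internal tool.",  # placeholder task
    examples=[  # placeholder examples of the voice you want
        "We tried three dashboards last year. Two died within a month. "
        "Here's what the survivor had in common.",
        "Short version: if nobody asked for it, hold off. Longer version below.",
    ],
    constraints=[
        "Explain it so a smart person without a technical background "
        "could follow it in two minutes.",
    ],
)
print(prompt)
```

Keeping each layer as a separate argument makes it easy to swap one layer (say, the audience) while holding the others fixed when you test variations.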

The Role-Based Approach

Role-based prompting is one of the most effective techniques for generating authentic content. By assigning the AI a specific identity with relevant experience, you're essentially giving it a lens through which to view the problem. A prompt that says 'You are a burnt-out freelancer who's tried 47 productivity tools' will produce different output than 'You are a productivity consultant.' The first has perspective born from failure; the second sounds like marketing. The best prompts combine role with specific constraints: 'You're a developer who loves elegant code but hates over-engineering. You've seen too many projects fail because of unnecessary complexity.' Now the AI has values, experience, and a point of view. It will naturally produce output that reflects those things [4].
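In a chat-style API, the role usually lives in the system message. The sketch below assumes the common list-of-dicts message format with `"system"`, `"user"`, and `"assistant"` roles (the shape used by OpenAI-style chat endpoints); `role_messages` is a hypothetical helper, and no request is actually sent.

```python
def role_messages(persona: str, values: list[str], request: str) -> list[dict[str, str]]:
    """Put the persona and its values in a system message, followed by the user's request."""
    system_prompt = " ".join([persona, *values])
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": request},
    ]


messages = role_messages(
    persona="You're a developer who loves elegant code but hates over-engineering.",
    values=["You've seen too many projects fail because of unnecessary complexity."],
    request="Should we split our monolith into microservices?",  # placeholder request
)
for m in messages:
    print(m["role"], "->", m["content"])
```

Putting the persona in the system message, rather than repeating it in every user turn, keeps it in one place for the whole conversation as long as you include that message with each request.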

Layering Context and Examples

Context is the difference between generic and specific. When you layer context (background information, audience details, relevant constraints), you're essentially building a mental model that the AI can reference. Include examples of what good output looks like, showing the tone, structure, and depth you want; this is few-shot prompting. You can also ask the AI to reason through the problem step by step before it writes, a technique called chain-of-thought prompting, which discourages it from jumping to generic conclusions [3]. The result feels more thoughtful, more authentic, more human. Include specific details: 'This is for a blog post aimed at founders who've raised Series A funding. They're past the survival stage but worried about scaling. They want practical advice, not motivational fluff.' Now the AI understands the audience's mindset and can adjust accordingly.
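One common way to layer context and examples in a chat-style prompt is to put the audience context in the system message and supply each example as a user/assistant pair before the real request. This sketch assumes the same role/content dictionary format as OpenAI-style chat APIs; `few_shot_messages` is a hypothetical helper and the example pair is a placeholder.

```python
def few_shot_messages(context: str,
                      examples: list[tuple[str, str]],
                      request: str) -> list[dict[str, str]]:
    """Context first, then each example as a user/assistant pair, then the real request."""
    messages = [{"role": "system", "content": context}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": request})
    return messages


context = ("This is for a blog post aimed at founders who've raised Series A funding. "
           "They're past the survival stage but worried about scaling. "
           "They want practical advice, not motivational fluff.")
examples = [  # placeholder example showing the desired tone and depth
    ("How should we think about our first sales hire?",
     "Hire the person who has sold something this weird before, "
     "not the best closer you can find."),
]
messages = few_shot_messages(context, examples, "When should we hire a VP of Engineering?")
print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
```

Because the examples appear as earlier turns in the conversation, the model sees them as demonstrations of the voice to continue rather than as instructions to follow.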

Constraints That Enable Rather Than Restrict

The best constraints don't limit; they enable. Instead of 'don't use jargon,' try 'explain technical concepts using everyday analogies.' Instead of 'don't be too long,' try 'keep each section to 150 words so readers can scan quickly.' Positive constraints steer the AI toward specific behaviors instead of merely fencing it off from unwanted ones. Research suggests that models often follow negative instructions less reliably than positive ones, because being told what not to do gives the model little guidance on what to do instead [3]. Flip the constraint to what you want, and output quality often improves noticeably. It's a small shift in phrasing that can produce outsized improvements in authenticity.
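A cheap way to apply this is to lint a prompt's constraint list for negative phrasing before you use it. The sketch below is a heuristic, not a standard tool: the regular expression only catches a few common negations, and the rewrite table `POSITIVE_REWRITES` is hypothetical, seeded with the two examples above.

```python
import re

# Heuristic: a few common negations, matched case-insensitively.
# Only straight apostrophes are matched; curly apostrophes slip through.
NEGATIVE = re.compile(r"\b(?:don't|do not|never|avoid)\b", re.IGNORECASE)

# Hypothetical lookup table seeded with the paragraph's own examples.
POSITIVE_REWRITES = {
    "don't use jargon": "Explain technical concepts using everyday analogies.",
    "don't be too long": "Keep each section to 150 words so readers can scan quickly.",
}


def review_constraints(constraints: list[str]) -> list[tuple[str, str]]:
    """Return (constraint, suggested rewrite) for every negatively phrased constraint."""
    flagged = []
    for constraint in constraints:
        if NEGATIVE.search(constraint):
            key = constraint.strip().rstrip(".").lower()
            suggestion = POSITIVE_REWRITES.get(
                key, "Rephrase as what the output should do instead.")
            flagged.append((constraint, suggestion))
    return flagged


for original, suggestion in review_constraints(
        ["Don't use jargon.", "Keep a friendly tone.", "Never mention pricing."]):
    print(f"{original!r} -> {suggestion}")
```

The lookup table will never cover every case; its main job is to surface negative constraints so a person can rewrite them.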

Testing and Refining for Maximum Realism

A prompt is never finished; it's always evolving. The difference between mediocre and exceptional AI output is iteration. You build a prompt, test it, analyze the output, identify what's missing, and refine. This cycle repeats until the output passes the authenticity test. Here's how to do it systematically. First, establish what 'authentic' means for your use case. Is it conversational? Skeptical? Detailed? Create a rubric. Then generate multiple outputs from the same prompt and compare them. Which ones feel most human? Which ones have the most personality? Which ones would you actually want to publish? Look for patterns. If outputs are too generic, your prompt needs more specific context or role definition. If they're too long-winded, add a constraint about brevity. If they lack perspective, add a role or point of view. This is iterative testing, and it's where most people fail because they expect the first output to be perfect. It won't be. The magic happens in the refinement cycle [1]. Use tools like LangChain and Prompt Mixer to systematize this process. They let you version control prompts, test variations, and track what works [1]. For teams, this is invaluable because it creates consistency and prevents prompt drift. What works for one piece of content can be adapted for similar tasks, saving time and improving quality across the board.
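The diagnosis-to-fix mapping in this paragraph (too generic, too long-winded, no perspective) can be written down as a small lookup so each refinement pass is explicit and repeatable. This is a minimal sketch; `FIXES` and `refine` are hypothetical names, and the wording each fix appends is only an example.

```python
# Hypothetical fixes keyed by the three problems named above.
FIXES = {
    "too_generic": "Context: {context}",
    "too_long": "Keep the whole response under {max_words} words.",
    "no_perspective": "Write from the point of view of {persona}.",
}


def refine(prompt: str, problems: list[str], **details: str) -> str:
    """Append one fix per observed problem; unknown problem labels are ignored.

    Raises KeyError if a fix needs a detail (e.g. max_words) that wasn't supplied.
    """
    additions = [FIXES[p].format(**details) for p in problems if p in FIXES]
    return "\n".join([prompt, *additions])


v1 = "Write a short guide to onboarding new engineers."
# Suppose a batch of v1 outputs read as long-winded and lacked a point of view:
v2 = refine(v1, ["too_long", "no_perspective"],
            max_words="300",
            persona="an engineering manager who has onboarded 40 people")
print(v2)
```

Each pass produces a new prompt version from the previous one, which pairs naturally with generating several outputs per version and comparing them.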

Building Your Testing Framework

Don't test prompts randomly. Create a framework. Define 3-5 criteria for authenticity: Does it sound like a real person? Does it have a clear perspective? Is it specific enough to be useful? Does it avoid generic language? Score each output against these criteria. Generate at least 3 variations of the same prompt and compare outputs. Which prompt produces the most authentic results? That's your winner. Document what worked. Over time, you'll develop intuition about what makes prompts effective. This systematic approach is far more reliable than gut feeling.
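Here is one way the scoring step might look, assuming a human reviewer rates each output from 1 to 5 on the four criteria above. `CRITERIA`, `prompt_score`, and `pick_winner` are hypothetical names, and the ratings below are made-up numbers purely to show the mechanics.

```python
from statistics import mean

# The four criteria listed above; each output gets a 1-5 rating per criterion.
CRITERIA = ("sounds_human", "clear_perspective", "specific_enough", "avoids_generic_language")


def prompt_score(ratings: list[dict[str, int]]) -> float:
    """Average rubric score across all outputs generated from one prompt variant."""
    for r in ratings:
        missing = set(CRITERIA) - set(r)
        if missing:
            raise ValueError(f"unscored criteria: {sorted(missing)}")
    return mean(mean(r[c] for c in CRITERIA) for r in ratings)


def pick_winner(results: dict[str, list[dict[str, int]]]) -> str:
    """Return the name of the prompt variant with the highest average score."""
    return max(results, key=lambda name: prompt_score(results[name]))


def rating(human: int, perspective: int, specific: int, generic: int) -> dict[str, int]:
    return dict(zip(CRITERIA, (human, perspective, specific, generic)))


# Made-up ratings: three outputs per prompt variant.
results = {
    "variant_a": [rating(3, 2, 3, 3), rating(3, 3, 2, 3), rating(2, 2, 3, 3)],
    "variant_b": [rating(4, 4, 3, 4), rating(4, 5, 4, 4), rating(3, 4, 4, 4)],
    "variant_c": [rating(4, 3, 4, 3), rating(3, 3, 3, 4), rating(4, 3, 3, 3)],
}
for name, outputs in results.items():
    print(name, round(prompt_score(outputs), 2))
print("winner:", pick_winner(results))  # winner: variant_b
```

Raising an error on unscored criteria keeps a half-finished review from quietly skewing the comparison.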

Analyzing Output for Authenticity Signals

Authentic output has specific markers. Look for: specific examples rather than generic statements, conversational language rather than formal tone, acknowledgment of complexity rather than oversimplification, and perspective rather than neutrality. When you see these markers, you know the prompt is working. When you see generic language, hedging, and corporate-speak, the prompt needs refinement. Pay attention to sentence structure too. Authentic writing varies sentence length. Short sentences. Medium ones. And longer, more complex sentences that build on previous ideas. If all sentences are the same length, it sounds robotic. Adjust your prompt to encourage variation.
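Sentence-length variation is one of the few markers above that you can measure automatically. This sketch computes the coefficient of variation of sentence lengths (standard deviation divided by mean); the naive sentence splitter and the 0.3 threshold are assumptions for illustration, not an established standard, so treat the flag as a prompt for a human re-read.

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Word count per sentence, splitting naively after ., ! or ? followed by whitespace."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means all sentences are equal length)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def sounds_monotonous(text: str, threshold: float = 0.3) -> bool:
    """Flag text whose sentence lengths barely vary; 0.3 is an arbitrary starting point."""
    return length_variation(text) < threshold


flat = "The tool is fast. The tool is cheap. The tool is new."
varied = ("Short sentences. Medium ones. And longer, more complex sentences "
          "that build on previous ideas.")
print(sentence_lengths(flat), sounds_monotonous(flat))      # [4, 4, 4] True
print(sentence_lengths(varied), sounds_monotonous(varied))  # [2, 2, 10] False
```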

Tools That Accelerate Refinement

PromptPerfect analyzes prompts for clarity and potential bias, giving you instant feedback on what's working [1]. LangChain lets you chain multiple prompts together for complex tasks, useful when you need outputs that build on each other [1]. Prompt Mixer adds version control and team collaboration, essential if you're building prompts at scale [1]. These tools don't replace human judgment, but they accelerate the refinement cycle significantly. They help you identify patterns in what works and what doesn't, turning prompt engineering from an art into a repeatable system.

Real-World Applications for Devs and Founders

Theory is useful. Application is everything. Here's how to actually use these techniques in products, content, and marketing. For developers building AI-powered features, the stakes are high. A chatbot that sounds robotic gets abandoned. One that sounds helpful and human gets used. Apply these principles: give your AI a role (helpful support engineer, knowledgeable product expert), layer in context about the user's situation, include examples of good responses, and test relentlessly. The difference in user satisfaction is measurable and significant. For content creators and marketers, authentic ChatGPT prompts mean blog posts that rank better, emails that convert better, and social content that gets shared more. Instead of asking AI to 'write a blog post about productivity,' ask it to 'write like you're a founder who's tried every productivity system and finally found one that actually works. Include specific examples from your own experience. Be skeptical of hype. Assume your reader is smart but busy.' The output will be more engaging, more specific, and more likely to resonate. For founders building products with AI at the core, this is your competitive advantage. When your AI features feel natural and human, users love them. When they feel mechanical, users tolerate them. The difference compounds over time in retention, word-of-mouth, and brand perception. Invest in prompt engineering as seriously as you invest in code quality. It matters just as much.

Building AI Features Users Actually Love

The best AI features feel invisible. Users don't think about the AI; they think about the value. This happens when prompts are engineered for authenticity. A customer support chatbot that sounds like a real person solves problems faster and generates higher satisfaction. An AI writing assistant that sounds like a helpful editor rather than a machine produces content users actually want to publish. The pattern is consistent: authenticity drives adoption. When building AI features, spend time on prompt engineering. It's not a nice-to-have; it's core product work. Test with real users. Does the AI output feel helpful or frustrating? Does it sound like someone who understands the problem or a generic responder? Iterate based on feedback. The best products obsess over this.
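Iterating on real-user feedback is easier if every AI response is tagged with the prompt version that produced it. A minimal sketch, assuming a simple thumbs-up/thumbs-down signal; `FeedbackLog` and the version labels are hypothetical, and in practice you would want a reasonable number of votes per version before trusting the comparison.

```python
from collections import defaultdict


class FeedbackLog:
    """Tallies 'was this helpful?' votes per prompt version (hypothetical helper)."""

    def __init__(self) -> None:
        # prompt version -> [helpful votes, total votes]
        self._votes = defaultdict(lambda: [0, 0])

    def record(self, prompt_version: str, helpful: bool) -> None:
        counts = self._votes[prompt_version]
        counts[0] += int(helpful)
        counts[1] += 1

    def helpful_rate(self, prompt_version: str) -> float:
        # .get() does not trigger the defaultdict factory, so unknown versions stay unknown.
        helpful, total = self._votes.get(prompt_version, (0, 0))
        return helpful / total if total else 0.0

    def best_version(self) -> str:
        """Version with the highest helpful rate; raises ValueError if nothing is logged yet."""
        return max(self._votes, key=self.helpful_rate)


log = FeedbackLog()
for version, helpful in [("support-v1", True), ("support-v1", False),
                         ("support-v2", True), ("support-v2", True),
                         ("support-v2", False), ("support-v1", False)]:
    log.record(version, helpful)
print(log.helpful_rate("support-v1"), log.helpful_rate("support-v2"))
print(log.best_version())
```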

Content Strategy With Authentic AI

Blogs, emails, and social content powered by well-engineered prompts outperform generic AI content. The key is building prompts that match your brand voice and audience expectations. Create a prompt template for each content type: blog posts, email newsletters, social media, product documentation. Include your brand voice, audience profile, and specific constraints. Then use these templates consistently. Over time, your AI-generated content becomes recognizable as yours, not generic AI. This builds trust and consistency, two things audiences value. Track performance. Which prompts produce content that gets shared, commented on, and linked to? Double down on those. Kill the ones that don't work. Let data guide your prompt refinement.
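A per-content-type template library can be as simple as a dictionary of `string.Template` objects from Python's standard library that share the same brand voice and audience slots. The voice string and the format instructions below are hypothetical placeholders; swap in your own.

```python
from string import Template

# Hypothetical brand voice; every template reuses it so outputs stay consistent.
BRAND_VOICE = "Plainspoken and practical, a little skeptical of hype."

TEMPLATES = {
    "blog_post": Template(
        "Voice: $voice\nAudience: $audience\n"
        "Write a blog post about $topic as narrative paragraphs "
        "with one concrete example per section."
    ),
    "email": Template(
        "Voice: $voice\nAudience: $audience\n"
        "Write a newsletter email about $topic: one idea, under 200 words, "
        "one clear call to action."
    ),
    "social": Template(
        "Voice: $voice\nAudience: $audience\n"
        "Write a social post about $topic in under 280 characters."
    ),
    "docs": Template(
        "Voice: $voice\nAudience: $audience\n"
        "Write product documentation for $topic as numbered steps, each starting with a verb."
    ),
}


def make_prompt(content_type: str, topic: str, audience: str) -> str:
    """Fill the template for one content type; raises KeyError for an unknown type."""
    return TEMPLATES[content_type].substitute(
        voice=BRAND_VOICE, audience=audience, topic=topic)


print(make_prompt("email", "our new pricing tiers", "existing customers on the free plan"))
```

`substitute` raises `KeyError` if a placeholder is left unfilled, which catches half-filled prompts before they reach the model.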

Scaling Prompt Engineering Across Teams

If you're managing a team creating AI content, consistency matters. Use tools like Prompt Mixer to version control prompts and maintain standards [1]. Document what works. Create templates. Train team members on the principles: specificity, role definition, context layering, iterative testing. When everyone follows the same framework, quality improves and output becomes consistent. This is especially important for regulated industries like healthcare and finance, where accuracy and tone matter enormously. A well-engineered prompt system becomes a competitive advantage because it's hard to replicate and scales with your team.
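The core idea behind prompt version control fits in a few lines, even though dedicated tools add collaboration and testing on top. This is a minimal in-memory sketch; `PromptLibrary` is a hypothetical class and does not model any specific tool's API. A real team would more likely keep prompts in git or a shared prompt-management tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptLibrary:
    """Minimal in-memory prompt store with numbered versions (hypothetical sketch)."""
    _versions: dict[str, list[str]] = field(default_factory=dict)

    def save(self, name: str, text: str) -> int:
        """Store text as the next version of `name`; return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        if not history or history[-1] != text:  # skip no-op saves
            history.append(text)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the latest version, or a specific 1-based version."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise IndexError(f"{name} has versions 1-{len(history)}, not {version}")
        return history[version - 1]

    def history(self, name: str) -> list[str]:
        """All stored versions of `name`, oldest first."""
        return list(self._versions.get(name, []))


lib = PromptLibrary()
lib.save("support-reply", "You are a patient support engineer.")
lib.save("support-reply", "You are a patient support engineer. "
                          "Restate the customer's problem before solving it.")
print(lib.get("support-reply"))             # latest version
print(lib.get("support-reply", version=1))  # original version
```

Numbered versions make it possible to say which prompt produced which output, which is what keeps team-wide iteration from drifting.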

Conclusion

Building AI prompts that feel human isn't magic; it's a system. You start with specificity, layer in context and role, include examples, add natural constraints, and iterate until the output passes the authenticity test. This process works whether you're building chatbots, writing blog posts, or creating marketing copy. The teams and founders winning in 2025 aren't the ones using AI; they're the ones using AI well. They've invested in prompt optimization as seriously as they invest in code quality. They understand that authentic AI output drives engagement, builds trust, and converts better than generic machine-generated text. The techniques in this guide (role-based prompting, layered context, few-shot examples, positive constraints, and iterative testing) reflect how AI researchers and product teams approach prompting in practice. They work because they're grounded in how AI models actually function. Start with one prompt. Test it. Refine it. Document what works. Build a library of effective prompts for your use cases. Share them with your team. Iterate based on performance data. Over time, you'll develop intuition about what makes prompts effective. Your AI outputs will sound less like machines and more like experts. Your audience will engage more deeply. Your products will feel more natural. That's the real advantage of mastering AI prompt engineering in 2025. Ready to start? Pick one piece of content you're currently creating with AI. Rewrite the prompt using the techniques in this guide. Generate three outputs. Compare them. Notice the difference. That's where the magic happens.

Frequently Asked Questions

What's the difference between generic and authentic AI prompts?

Generic prompts lack specificity and context, producing vague, template-like output. Authentic prompts include role definition, audience context, specific examples, and natural constraints. A generic prompt says 'write about productivity.' An authentic one says 'write like a founder who's tried 20 productivity systems and finally found one that works, using specific examples from your experience.' The second produces output that sounds human and specific [1].

How many times should I test a prompt before using it?

Test at least 3-5 variations and generate multiple outputs from each. Compare them against your authenticity criteria. Most prompts need 2-3 refinement cycles before they're production-ready. The investment pays off because a well-engineered prompt produces consistently better output, saving time and improving quality across all uses [1].

Can I use the same prompt for different content types?

Not directly. A prompt engineered for blog posts won't work well for emails or social media. Create template prompts for each content type, including format, tone, and length specifications. You can reuse the core principles (role definition, context layering, constraints) but customize them for each format. This consistency builds a recognizable brand voice [1].

What tools help with prompt engineering at scale?

LangChain links multiple prompts for complex tasks, Prompt Mixer adds version control and team collaboration, and PromptPerfect analyzes prompts for clarity and bias [1]. These tools systematize the refinement process, especially valuable for teams. They help track what works and prevent prompt drift across projects.

How do I know if my AI output sounds authentic?

Look for specific examples rather than generic statements, conversational language, clear perspective, and varied sentence length. Authentic output sounds like someone explaining something they understand deeply. If it sounds corporate, hedged, or template-like, the prompt needs refinement. Test with real users—their feedback is the ultimate authenticity test [3].